Master Semester Project

Evaluation of NPU Acceleration for Offloading 5G Software Workloads

Full Report: HERE

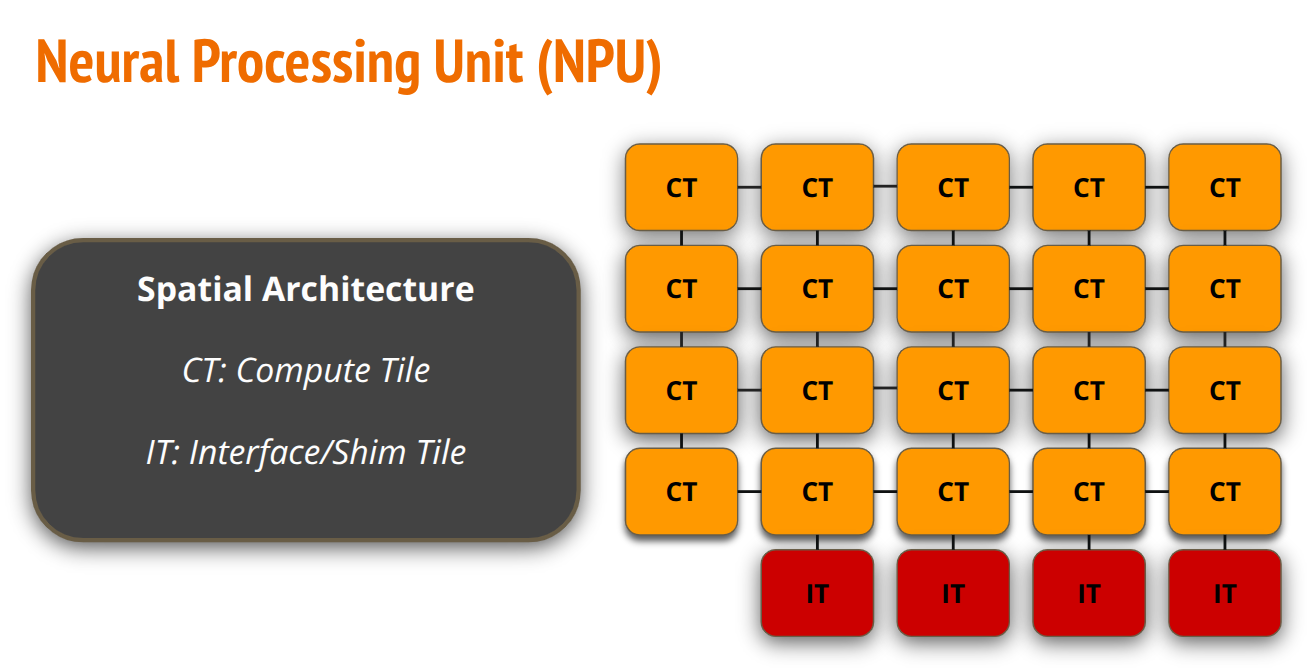

Neural Processing Units (NPUs) are recent, specialized hardware chips. Built primarily from AI Engines and commonly integrated into modern microprocessors, they serve as fast compute units for specific applications such as Digital Signal Processing (DSP) and Machine Learning. The idea of the project was to explore the possibility of offloading 5G software workloads onto an NPU with the XDNA AIE-ML architecture from AMD/Xilinx, using MLIR-AIE, an open-source toolkit that targets the AI Engines in both Versal and Ryzen AI products. A second objective was to evaluate the performance compared to CPU-based implementations. I took the liberty of benchmarking against srsRAN, an open-source 5G stack, focusing on Discrete Fourier Transforms (DFTs). In addition, I tried to explain to new developers how to program the AI Engines efficiently with MLIR-AIE, and highlighted the issues I encountered while programming with this architecture and toolchain. Finally, because this project sits at the frontier of research (a very recent piece of hardware with almost no existing projects), I have pointed to the documentation that new researchers will need if they want to dig into the software paradigm of AI Engines.

What about the details?

To program an NPU efficiently, I first had to experiment with some development tools, such as Ryzen AI Software and Riallto. This new paradigm taught me a lot about hardware-specialized chips in general, and about the process of creating kernels, which are the functions executed on the compute units of the NPU. After weeks of learning, I chose MLIR-AIE, an MLIR-based toolchain that can target the architecture of my NPU, XDNA/AIE-ML, which is specialized for ML workloads. You may ask why I chose this architecture rather than XDNA/AIE, which is specialized for DSP applications. I simply wasn't aware that Ryzen AI processors only include the AIE-ML architecture (unfortunately, ML is what people want in their processors). Still, I had to continue my project with it 😅.

The main reason I chose MLIR-AIE is that I needed C++ host code. The host code is the code that runs at runtime to launch workloads on the NPU: it is where you actually execute kernels or, put differently, where you move data in and out of the NPU. Since srsRAN is written in C++, my complex data had to be handled by C++ code. Keep this “complex data” in mind, because it is more important than you think!

Speaking of complex data types, srsRAN represents its data as complex floats. The AIE-ML architecture is specialized for low-precision arithmetic, and bfloat16 is the only floating-point data type it offers. bfloat16 is a 16-bit float with an 8-bit exponent and only 8 bits of mantissa precision (7 stored bits plus the implicit one). The toolchain can use it, but on the srsRAN side the floats have to be cast to bfloat16 using a library that implements this type: ta-da, I present Eigen 😂. Even though it is a linear algebra library, it provides a bfloat16 type that works with C++17, the standard srsRAN is built with (the C++ standard library only introduced std::bfloat16_t in C++23).
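To make the float-to-bfloat16 step concrete, here is a minimal C++17 sketch of what the cast does to srsRAN-style `std::complex<float>` samples. In my setup Eigen's bfloat16 type did the conversion; the hand-rolled helpers below (`float_to_bf16`, `bf16_to_float`, `pack_cbf16` and `unpack_cbf16` are hypothetical names) only illustrate the idea: bfloat16 keeps the upper 16 bits of a float32, here with round-to-nearest-even. The interleaved (re, im) buffer layout is an assumption, and NaN inputs are not handled.

```cpp
#include <complex>
#include <cstddef>
#include <cstdint>
#include <cstring>
#include <vector>

// Convert a float32 to bfloat16 bits with round-to-nearest-even.
// bfloat16 keeps the sign, the 8 exponent bits and the top 7 mantissa bits.
// Assumption: inputs are finite (NaN handling omitted for brevity).
std::uint16_t float_to_bf16(float f) {
    std::uint32_t bits;
    std::memcpy(&bits, &f, sizeof bits);
    // Adding 0x7FFF plus the lowest kept bit makes exact ties round to even.
    const std::uint32_t rounding = 0x7FFFu + ((bits >> 16) & 1u);
    return static_cast<std::uint16_t>((bits + rounding) >> 16);
}

// Widen bfloat16 bits back to float32 by padding the mantissa with zeros.
float bf16_to_float(std::uint16_t h) {
    const std::uint32_t bits = static_cast<std::uint32_t>(h) << 16;
    float f;
    std::memcpy(&f, &bits, sizeof f);
    return f;
}

// Pack complex floats into an interleaved (re, im) bfloat16 buffer.
// Assumption: this interleaved layout is what the NPU-side buffer expects.
std::vector<std::uint16_t> pack_cbf16(const std::vector<std::complex<float>>& in) {
    std::vector<std::uint16_t> out;
    out.reserve(2 * in.size());
    for (const auto& z : in) {
        out.push_back(float_to_bf16(z.real()));
        out.push_back(float_to_bf16(z.imag()));
    }
    return out;
}

// Inverse of pack_cbf16: rebuild complex floats from (re, im) bfloat16 pairs.
std::vector<std::complex<float>> unpack_cbf16(const std::vector<std::uint16_t>& in) {
    std::vector<std::complex<float>> out;
    out.reserve(in.size() / 2);
    for (std::size_t i = 0; i + 1 < in.size(); i += 2) {
        out.emplace_back(bf16_to_float(in[i]), bf16_to_float(in[i + 1]));
    }
    return out;
}
```

Round-tripping `1.00390625f` (1 + 2⁻⁸) through these helpers gives back `1.0f`: that is exactly the precision loss the AIE-ML kernels have to live with.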

Challenges

MLIR-AIE brought many challenges. It relies on an open-source compiler called Peano, a fork of LLVM (LLVM-AIE) that targets AIE architectures. After weeks of struggling to compile kernels that required complex data types such as complex bfloat16, and after searching every project I could find for a piece of code that would explain these troubles, I finally got the answer: Peano does not handle complex data types for the AIE-ML architecture (yes, really…). This was a pain, because how could I compute srsRAN's DFTs without complex numbers? Fortunately, AMD provides another, closed-source compiler, xchesscc, which comes with the Vitis tools (originally supporting the AIE architecture) and provides this support.
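To see why complex arithmetic is unavoidable here, recall the DFT definition, X[k] = Σₙ x[n]·exp(-2πikn/N): every output bin is a sum of complex products. The sketch below is a naive O(N²) C++ reference (the name `naive_dft` is hypothetical, and this is not how srsRAN computes its DFTs); a reference like this is also handy for checking NPU results on the host.

```cpp
#include <cmath>
#include <complex>
#include <cstddef>
#include <vector>

// Naive O(N^2) DFT: X[k] = sum_n x[n] * exp(-2*pi*i*k*n/N).
// Each output bin is a sum of complex multiply-accumulates, which is why a
// compiler without complex support cannot express this kernel directly.
std::vector<std::complex<float>> naive_dft(const std::vector<std::complex<float>>& x) {
    const std::size_t n_points = x.size();
    const double pi = std::acos(-1.0);
    std::vector<std::complex<float>> X(n_points);
    for (std::size_t k = 0; k < n_points; ++k) {
        std::complex<double> acc(0.0, 0.0);  // accumulate in double precision
        for (std::size_t n = 0; n < n_points; ++n) {
            // Reduce k*n modulo N so the angle stays small and accurate.
            const double angle = -2.0 * pi * static_cast<double>((k * n) % n_points)
                                 / static_cast<double>(n_points);
            acc += std::complex<double>(x[n]) * std::polar(1.0, angle);
        }
        X[k] = std::complex<float>(acc);
    }
    return X;
}
```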

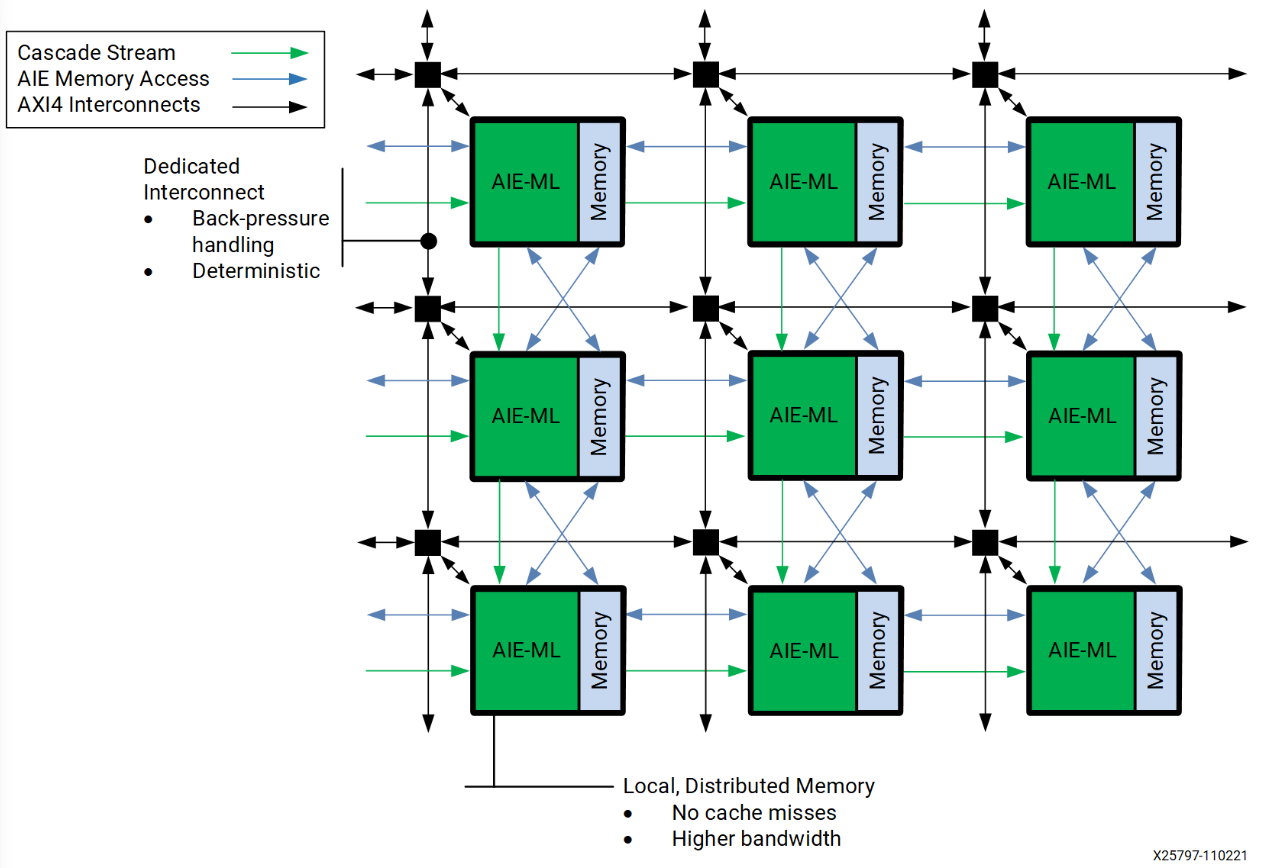

Developing kernels is not an easy task, as it requires developers to be aware of the NPU's hardware details. I want to express my gratitude to AMD for providing such extensive documentation (even if it is messy). Projects such as Riallto do not stress enough the need to read it. They assume developers will only use the bare minimum of their libraries to create kernels, for example to run model inference or simple matrix multiplications. The key issue is that as soon as you deviate even slightly from what these projects are designed for, you end up with messy code that does not work, and you don't understand why. Didn't know that a compute tile only has two input and two output DMA channels (four in total), and you are sending three types of data to it? Then you can only pray that ChatGPT finds the answer for you, because the only place where this kind of thing is written down is AMD's documentation.
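This kind of DMA channel limit can be checked before even writing the MLIR-AIE design. Below is a hypothetical C++ sketch (the types `TileDmaBudget`, `FifoEndpoint` and the function `fits_dma_budget` are illustrative, not part of MLIR-AIE) that counts the ObjectFIFOs entering and leaving a compute tile, assuming, as in my setup, that each one takes one DMA channel and that the tile has two input and two output channels.

```cpp
#include <string>
#include <vector>

// Hypothetical model of a compute tile's DMA budget on AIE-ML:
// two input and two output DMA channels per tile.
struct TileDmaBudget {
    int max_in = 2;
    int max_out = 2;
};

// One ObjectFIFO endpoint on the tile. Assumption: every endpoint goes
// through the tile DMA and therefore consumes one channel in its direction.
struct FifoEndpoint {
    std::string name;
    bool into_tile;  // true: data flows into the compute tile
};

// Returns true if the requested ObjectFIFOs fit in the tile's DMA channels.
bool fits_dma_budget(const std::vector<FifoEndpoint>& fifos, const TileDmaBudget& budget) {
    int in = 0;
    int out = 0;
    for (const auto& f : fifos) {
        if (f.into_tile) {
            ++in;
        } else {
            ++out;
        }
    }
    return in <= budget.max_in && out <= budget.max_out;
}
```

With this model, an input FIFO, a twiddle FIFO and an output FIFO fit, while adding a third input stream (say, extra parameters) does not, which is exactly the situation described above.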

The following table summarizes the main limitations I encountered while working on this NPU with the AIE-ML architecture. You may think it's not much, but given the lack of documentation for MLIR-AIE, I hope new developers will read my report carefully to avoid these issues, as each one takes several hours to solve. You can download my full report 📄 here if you want more details, in a text less messy than this one!

| Limitation | Description |
|---|---|
| Twiddles | Twiddle factors have to be computed before invoking the AIE-API's FFT algorithm, because of the strict requirement for the bfloat16 data type. |
| Lack of radix-based FFT | The lack of radix-3 and radix-5 implementations for the AIE-ML architecture means we can only compute N-point DFTs for N = 2ᵏ. By contrast, srsRAN uses N = 754, so further considerations are needed. |
| Floating-point data type | The XDNA AIE-ML architecture does not support float32, only bfloat16, which has lower precision. Float32 can be emulated in software, but this slows down the computations. |
| Complex data type | LLVM-AIE does not support the AIE-API FFT intrinsics for complex types. Using xchesscc, which comes with the Vitis tools from AMD/Xilinx, is mandatory. |
| XRT kernel overhead | In my experiments, XRT adds an overhead of 30 to 60 µs when running a single kernel, whereas the CPU takes around 3 µs to compute one DFT. Running one DFT at a time was necessary to benchmark against srsRAN, which computes one DFT at a time. Several solutions, involving OpenCL for example, are discussed in the Result section. |
| Tracing in MLIR-AIE | Tracing is not implemented in the IRON API. Any IRON file has to be converted to the CTM implementation. |
| xchesscc and instructions file | When switching from the Peano compiler to xchesscc, note that the instructions file for the NPU is not in hexadecimal format, which XRT requires. |
| DMA channels in CTs | The number of input and output DMA channels of a compute tile (CT) is an absolute hardware limitation. It limits the number of ObjectFIFOs, since each one takes a channel. As mentioned in the introduction, two input and two output DMA channels are available. |
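The first two rows of the table can be illustrated together. The following C++17 sketch (all function names are hypothetical) rejects sizes that are not powers of two, as a radix-2-only FFT would, and precomputes the N/2 radix-2 twiddle factors W_N^k = exp(-2πik/N) in double precision before rounding each component to bfloat16 precision. The exact twiddle layout expected by the AIE-API FFT stages is not reproduced here; this only shows the precompute-then-quantize step.

```cpp
#include <cmath>
#include <complex>
#include <cstddef>
#include <cstdint>
#include <cstring>
#include <vector>

// True if n = 2^k for some k >= 0 (the only sizes a radix-2 FFT handles).
bool is_power_of_two(std::size_t n) {
    return n != 0 && (n & (n - 1)) == 0;
}

// Round a float to the nearest bfloat16 value (round-to-nearest-even),
// returned as a float so the precision loss stays visible. NaN not handled.
float round_to_bf16(float f) {
    std::uint32_t bits;
    std::memcpy(&bits, &f, sizeof bits);
    bits += 0x7FFFu + ((bits >> 16) & 1u);
    bits &= 0xFFFF0000u;  // keep sign, exponent and top 7 mantissa bits
    std::memcpy(&f, &bits, sizeof f);
    return f;
}

// Precompute W_N^k = exp(-2*pi*i*k/N) for k = 0..N/2-1 in double precision,
// then quantize each component to bfloat16. Returns an empty vector if N is
// not a power of two (or is smaller than 2).
std::vector<std::complex<float>> bf16_twiddles(std::size_t n) {
    std::vector<std::complex<float>> tw;
    if (n < 2 || !is_power_of_two(n)) return tw;
    const double pi = std::acos(-1.0);
    tw.reserve(n / 2);
    for (std::size_t k = 0; k < n / 2; ++k) {
        const double angle = -2.0 * pi * static_cast<double>(k) / static_cast<double>(n);
        tw.emplace_back(round_to_bf16(static_cast<float>(std::cos(angle))),
                        round_to_bf16(static_cast<float>(std::sin(angle))));
    }
    return tw;
}
```

For N = 512 this yields 256 twiddles, while N = 754 is rejected.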

What was the final setup?

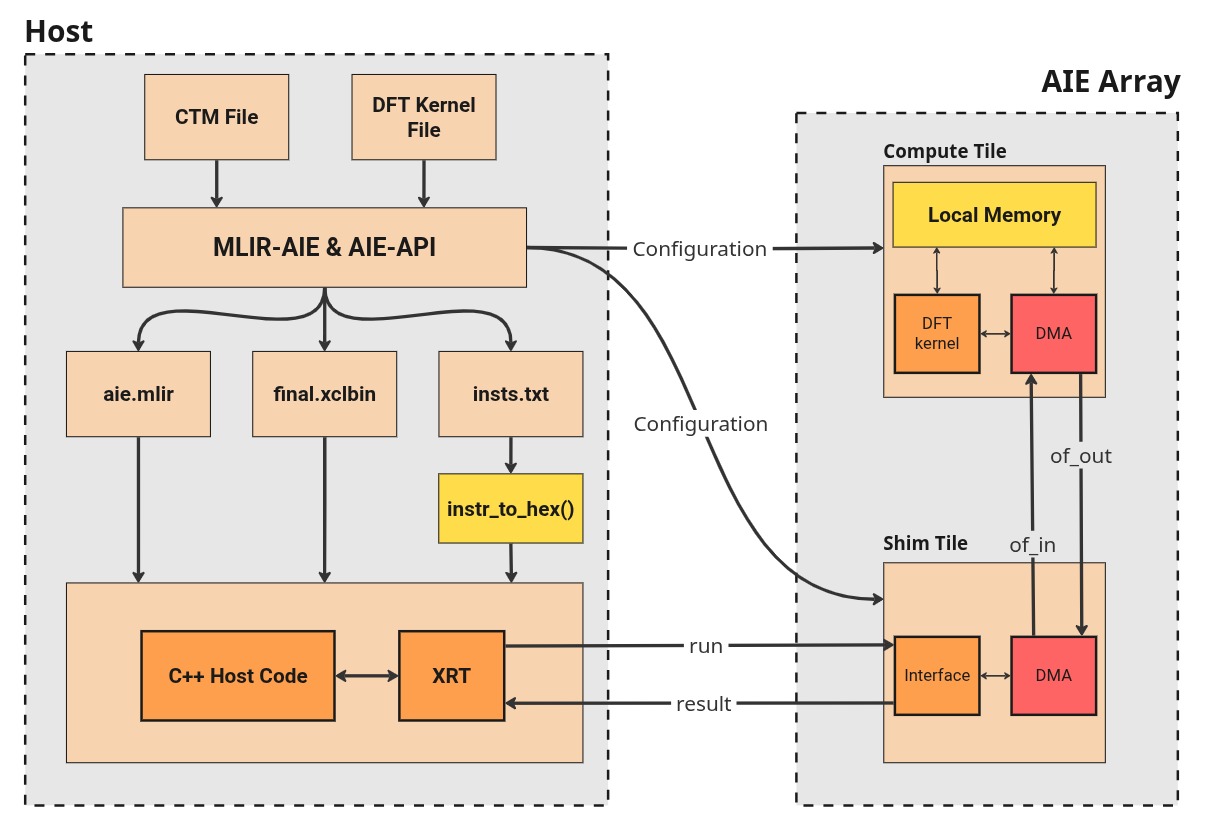

With MLIR-AIE, I managed to finish my kernel for computing DFTs of size N = 512. The image above shows the final schema of my setup.

I don't want to go into too much detail here, but the idea is pretty simple when you say it out loud. The tiles are configured with MLIR-AIE, which sets up an interface tile (IT) to bridge with external memory and a compute tile (CT) to compute a DFT. They are linked with ObjectFIFOs, abstract elements implementing first-in first-out buffers that move data across the NPU. In addition, the toolchain outputs a binary file (xclbin) and an instructions file, which the XRT runtime uses so that the host code can actually execute the DFT kernel on the NPU.
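ObjectFIFOs are easier to reason about once you see their acquire/release discipline. The following is a plain C++ software analogy, not the MLIR-AIE API (the class `ObjectFifoModel` and its methods are hypothetical): a fixed ring of buffers where the producer acquires a free slot, fills it and releases it, and the consumer acquires the oldest filled slot, reads it and releases it back. With a depth of 2 this gives ping-pong (double) buffering, which typically lets one side fill a buffer while the other side works on the second one.

```cpp
#include <array>
#include <cstddef>

// Software analogy (not the MLIR-AIE API) of an ObjectFIFO:
// a ring of Depth fixed-size buffers with acquire/release on both sides.
template <typename Buffer, std::size_t Depth = 2>
class ObjectFifoModel {
public:
    // Producer: get a free buffer to fill, or nullptr if all slots are full.
    Buffer* acquire_produce() {
        if (count_ == Depth) return nullptr;
        return &slots_[(head_ + count_) % Depth];
    }
    // Producer: hand the filled buffer over to the consumer.
    void release_produce() {
        if (count_ < Depth) ++count_;
    }

    // Consumer: get the oldest filled buffer, or nullptr if none is ready.
    Buffer* acquire_consume() {
        if (count_ == 0) return nullptr;
        return &slots_[head_];
    }
    // Consumer: give the buffer back to the producer.
    void release_consume() {
        if (count_ == 0) return;
        head_ = (head_ + 1) % Depth;
        --count_;
    }

private:
    std::array<Buffer, Depth> slots_{};
    std::size_t head_ = 0;   // index of the oldest filled slot
    std::size_t count_ = 0;  // number of filled slots
};
```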

Conclusion

The word “evaluation” in the title of this project means I had to conclude whether or not offloading DFTs onto the XDNA/AIE-ML NPU architecture is worthwhile. The answer is NO, for many reasons, although I can temper that no with a very small yes. Let me explain the results.

The idea was to benchmark the time the NPU needs to compute a 512-point DFT against a CPU implementation from srsRAN. I can fairly say that the NPU is not optimal. Kernels have a start-up overhead (mostly resource allocation) of up to around 60 microseconds. Computing DFTs sequentially, one at a time, is not a good way of measuring an NPU's efficiency. But because srsRAN computes DFTs that way, and I didn't have time to build another benchmark, this is the main numerical result I could produce. In addition, the number of issues I ran into just to run complex-float operations leaves me skeptical about using this ML architecture for DSP applications (which seems somewhat logical… it's in the name).
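A quick back-of-the-envelope calculation shows why sequential launches cannot pay off. Assuming a fixed launch overhead and a constant per-DFT cost on each side, batching B DFTs into one launch only beats the CPU when overhead + B·t_NPU < B·t_CPU, i.e. B > overhead / (t_CPU - t_NPU). The sketch below (the function name is hypothetical) computes the smallest such B. The NPU's per-DFT compute time is left as a free parameter, and the assumption that the overhead does not grow with the batch size is a simplification.

```cpp
#include <cmath>

// Smallest number of DFTs per kernel launch for which the NPU beats the CPU,
// assuming a fixed launch overhead and constant per-DFT costs:
//   overhead + B * npu_per_dft < B * cpu_per_dft
//   => B > overhead / (cpu_per_dft - npu_per_dft)
// Returns -1 if the NPU can never win (npu_per_dft >= cpu_per_dft).
long min_batch_to_beat_cpu(double overhead_us, double cpu_per_dft_us, double npu_per_dft_us) {
    const double gain_per_dft = cpu_per_dft_us - npu_per_dft_us;
    if (gain_per_dft <= 0.0) return -1;
    // Strict inequality: the smallest integer strictly above the ratio.
    return static_cast<long>(std::floor(overhead_us / gain_per_dft)) + 1;
}
```

With the measured figures (60 µs overhead, about 3 µs per CPU DFT) and an idealized zero-cost NPU kernel, `min_batch_to_beat_cpu(60.0, 3.0, 0.0)` returns 21: even an infinitely fast kernel needs more than 20 DFTs per launch to come out ahead, and more than 10 with the 30 µs best case.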

Still, I strongly disagree with the idea that NPUs are not useful; we just need the right one: the XDNA/AIE architecture found in AMD's Versal SoCs. A follow-up project could offload DFTs onto these chips and measure how much faster the CPU can handle other work in the meantime, to see whether we gain time and performance: that's the main idea behind an NPU, relieving the CPU.